Over the last few days, I completed the second week of the CXL Institute’s 12-week Scholarship Program, in which I am studying the Growth Marketing Minidegree.

As described in my previous post, the minidegree is divided into the following steps:

- Growth marketing foundations

- Running growth experiments

- Data and analytics

- Conversion

- Channel-specific growth skills

- Growth program management

- Management

- Final exam — growth marketing

This past week, I started the second step, “Running growth experiments”, which deals with one of the foundations of Growth Marketing: the experimentation process. To teach the skills and strategies behind this process, this step consists of 4 courses and 2 event videos:

Courses

- Research and testing — Peep Laja

- Conversion Research — Peep Laja

- A/B testing mastery — Ton Wesseling

- Statistics fundamentals for testing — Ben Labay

Event videos

- A better way to prioritize A/B tests — Leho Kraav

- What to test next: prioritizing your tests — P. Marol & J. Foucher

The experimentation process is one of the fundamental pillars that should guide a company’s marketing. Today, potential consumers of a product are constantly exposed to stimuli from a wide variety of sources. Thanks to technological advances, these interactions generate a great deal of new information about how consumers respond to a brand and its message.

The experimentation process is crucial for handling all this new data efficiently, and this is what the second module of the Growth Marketing Minidegree is all about. This week, I took two courses from this step, both taught by Peep Laja, one of the most respected CRO (conversion rate optimization) professionals in the industry and the founder of CXL Institute. Below is a brief summary of the courses Research and testing and Conversion Research.

The instructor opens the course with a note about optimization, listing three important points to keep in mind before we start:

- Test (or make) more effective changes: we want to be sure we are testing relevant hypotheses and that the results of these tests will translate into real improvements.

- Reduce the duration (and cost) of optimization: optimization ideas are often expensive and/or time-consuming to test. Both scenarios are undesirable in the optimization process.

- Improve the speed of experimentation: test, test, test (relevant ideas, of course). A high testing frequency speeds up experimentation and delivers results faster.

“every day without a test running on a page/layout is regret by default.” — Peep Laja

How should we optimize a website?

We often come across tips on how to optimize different parts of a business: best practices for building landing pages, for content production, for interface design, and many others. However, best practices only take us so far; they should be the starting point. From there, we must work out which techniques and tests to apply, keeping in mind that applying too many techniques can be harmful and unproductive. Prioritization is key, so that we avoid wasting precious time on fruitless tests. The ResearchXL Framework helps with this.

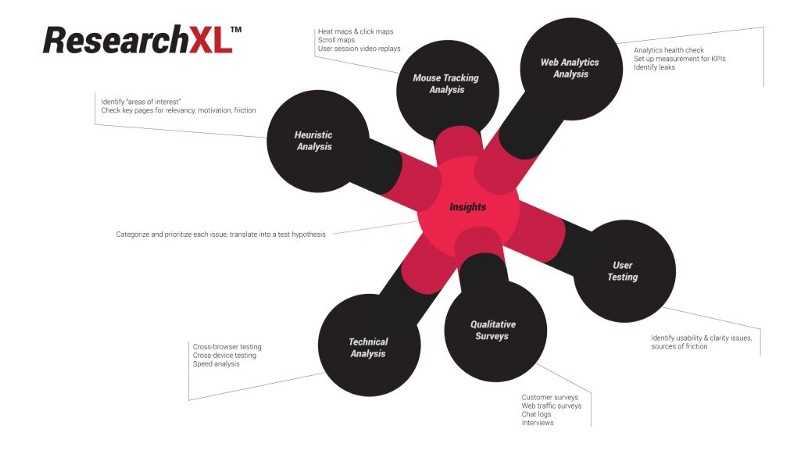

ResearchXL Framework

A structured way to list, organize, and prioritize tests keeps the optimization process efficient. A successful testing program combines a high volume of hypotheses with a high number of conclusive tests whose conclusions actually increase the value of the business. The ResearchXL Framework was created with this goal in mind: it helps identify problems and opportunities on a website and turn them into testable hypotheses.

The model defines 6 steps for collecting and analyzing data, so that the findings can be turned into actions:

- Technical Analysis: identify exactly what is not working on the page, mainly in terms of speed and compatibility (across devices and browsers).

- Heuristic Analysis: assess the page in terms of relevance, clarity, motivation, and friction to pinpoint the problems it has.

- Web Analytics Analysis: define KPIs that reveal where the weak points are and that can be monitored to check the health of the site.

- Mouse Tracking & form analytics: set up tracking to see what users are doing on the site, using heat maps, click maps, scroll maps, session replays, and other tools.

- Qualitative Surveys: create and run questionnaires with users to understand what types of customers there are, what problems they are trying to solve, what has stopped them from buying, and so on.

- User Testing: test with real users to identify which parts of the message are not getting through and which barriers are hindering the conversion process.

Following this structure, it is possible to build a well-defined conversion optimization process that consistently uncovers new growth opportunities. A conversion rate optimization model is successful when it can tell us:

- where the problems are

- what the problems are

- why these problems exist

- how to turn them into hypotheses

- how to guide prioritization and instant fixes

With the model in place, we can focus on testing efficiently. Issues should be prioritized according to two main criteria:

- degree of difficulty: how many resources it will take to verify a hypothesis, whether money or time (an often underestimated resource).

- expected impact: how much resolving the issue will affect the business.
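The two criteria above can be combined in many ways. Below is a minimal sketch, assuming a hypothetical 1-to-5 scale for each criterion and ranking hypotheses by their impact-to-difficulty ratio. This scoring rule and the example hypotheses are my own illustrative choices, not a formula taken from the course.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    impact: int      # hypothetical 1-5 scale: expected effect on the business
    difficulty: int  # hypothetical 1-5 scale: money and time needed to test it

    @property
    def score(self) -> float:
        # Assumed rule: higher impact and lower difficulty move a hypothesis up the queue.
        return self.impact / self.difficulty


def prioritize(hypotheses: list[Hypothesis]) -> list[Hypothesis]:
    """Return hypotheses sorted from highest to lowest impact-to-difficulty ratio."""
    return sorted(hypotheses, key=lambda h: h.score, reverse=True)


# Illustrative backlog; names and scores are made up for the example.
backlog = [
    Hypothesis("Rewrite checkout headline", impact=3, difficulty=1),
    Hypothesis("Redesign product page", impact=5, difficulty=5),
    Hypothesis("Add trust badges near the form", impact=4, difficulty=2),
]

for h in prioritize(backlog):
    print(f"{h.score:.2f}  {h.name}")
```

A plain ratio keeps the trade-off visible: a cheap, moderately impactful test can outrank an expensive redesign. Teams often prefer richer scoring models, but the principle of weighing effort against expected impact stays the same.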

To help us run tests well, the instructor also lists 12 testing mistakes he frequently sees:

- Precious time wasted on stupid tests: usually tests of irrelevant items, chosen without any research.

- You think you know what will work: when we are guided by industry best practices or widely shared knowledge, we may fail to run tests that matter for the specific reality of our business.

- You copy other people’s tests: it is important to look for solutions to the problems your own site has. Drawing some inspiration from other tests is fine, but we have to focus on solving the specific challenges of our business.

- Your sample size is too low: statistical validity is crucial, and a sample that is too small can completely invalidate a test.

- You run tests on pages with very little traffic: from the start, it is important to know how much data a test will generate and whether it can reach statistical validity.

- Your tests don’t run long enough: even on sites with tens of thousands of conversions per week, short tests can be skewed by external factors and wrongly validate a hypothesis. It is important to minimize the influence of outside factors to avoid statistical inconsistencies.

- You don’t test full weeks at a time: testing partial weeks can produce statistical inconsistencies because user behavior varies by day of the week. It is important to test whole weeks and, preferably, for less than 4 weeks in total, to avoid sample pollution.

- The data is not sent to third-party analytics: testing tools can report false positives. It is important to track conversion data in a third-party tool such as Google Analytics or Adobe Analytics, to understand how users actually behave on the site.

- You give up after your first test for a hypothesis fails: as long as there is a relevant problem to solve and data pointing toward a solution, it is important to keep testing new solutions.

- You’re not aware of validity threats: the history effect, the instrumentation effect, and the selection effect. These threats can introduce complications that undermine the validation of a change you have implemented.

- You’re ignoring small gains: increasing conversion consistently matters. Over the long term, the compounding effect shows how significant small gains can be.

- You’re not running tests at all times: every day without a test running is a guaranteed loss, both in hypotheses left unverified and in the opportunity cost of not testing.
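Several of the mistakes above (a sample size that is too low, tests on low-traffic pages, not testing full weeks, ignoring small gains) come down to simple arithmetic. The sketch below uses the standard normal-approximation formula for a two-sided, two-proportion z-test to estimate visitors per variant, then rounds the run time up to whole weeks. It is a simplified illustration, not necessarily the method the course or CXL’s calculators use; the function names, the baseline rate, the minimum detectable effect (MDE), and the traffic figures are all hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variant (two-sided, two-proportion z-test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # conversion rate we hope to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


def duration_in_full_weeks(n_per_variant: int, variants: int, weekly_visitors: int) -> int:
    """Round the run time up to whole weeks so every weekday is equally represented.

    weekly_visitors is the total traffic to the tested page, split across variants.
    """
    days = n_per_variant * variants / (weekly_visitors / 7)
    return max(1, math.ceil(days / 7))


def compounded_lift(monthly_lift: float, months: int) -> float:
    """Total relative improvement after repeating the same small lift every month."""
    return (1 + monthly_lift) ** months - 1


# Hypothetical page: 3% baseline conversion, aiming to detect a 10% relative lift.
n = sample_size_per_variant(baseline_rate=0.03, relative_mde=0.10)
weeks = duration_in_full_weeks(n, variants=2, weekly_visitors=40_000)
print(n, weeks)  # roughly 53,000 visitors per variant, 3 full weeks
print(f"{compounded_lift(0.02, 12):.1%}")  # 26.8%
```

If the estimated duration comes out well above the 4 weeks mentioned in the list, that is a sign the page lacks the traffic for the test, or that the MDE needs to be larger. The last line shows why small gains matter: a 2% lift repeated monthly adds up to roughly 27% over a year.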

These were some of the points I learned during my second week studying the Growth Marketing Minidegree at CXL. Next week, I plan to go deeper into the experimentation process from a more hands-on angle, covering A/B testing and the statistical fundamentals needed to support decision making and organize the optimization process systematically.